Artificial Intelligence Can Now Read Your Thoughts and Turn Them Into Speech and Images


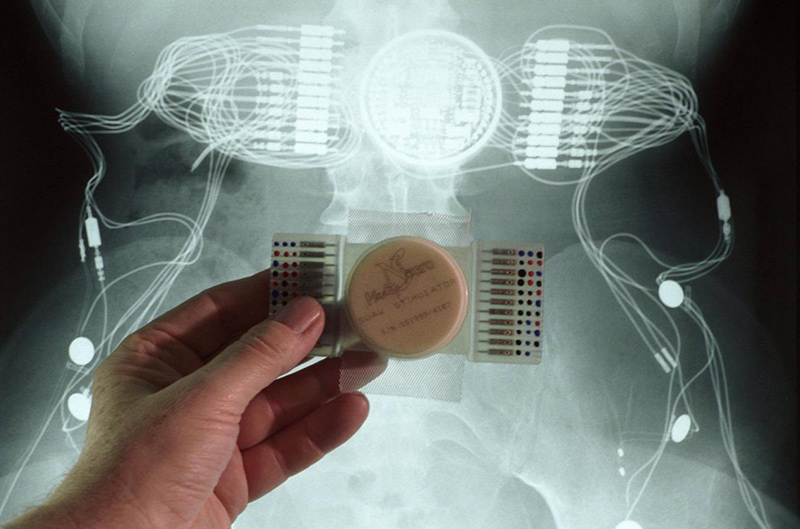

(Fortune China)

John Nosta is president of NostaLab. Translator: Agatha

A recent article in Nature highlights a discovery that pushes the boundaries of our imaginations and challenges some of the very attributes that make us human. The piece details how artificial intelligence is creating speech by interpreting brain signals (and even offers an audio recording for a chance to hear it for yourself). It’s a key advancement for people who can’t speak because it provides a direct, technology-enabled path from thought to speech.

The implications of this discovery go beyond the recreation of speech: A.I. was used to decode brain waves and then reassemble neural impulses. While the focus of this study was on the mechanistic components of speech, such as direct muscle movement, it still acquired information from the early stages of thought development to construct words that were identifiable about 70% of the time. In other words, A.I. actually translated the code that makes up pre-speech.

A.I. has also enabled the recreation of another sense through the reading of neural output: vision. In a recent study, for instance, functional magnetic resonance imaging (fMRI) data was combined with machine learning to visualize perceptual content from the brain. Image reconstruction from this brain activity—which was translated by A.I.—recreated images on a computer screen that even the casual observer could recognize as the original visual stimuli.

But here’s where it gets really interesting: These advancements create the potential for a new level of direct communication mediated not by humans but by A.I. and technology.

Steps are currently being taken to move such technology from research to real-life application. An electronic mesh, a microscopic network of flexible circuits that are placed into the brain and insulated with actual nerve cells, is now being tested in animals. Even Elon Musk has joined the effort to send impulses directly to and from the human brain. His company Neuralink is developing an interface between computer and brain using neural lace technology, a cellular infrastructure that allows microelectrodes to be incorporated into the structure of the brain itself.

What lies ahead is a further blurring of the distinction between man and machine: A.I. may soon find a new home as less of an external device and more of a neuromechanical biological system that lives within our bodies. The codification of speech and vision into pre-sensory data and the potential creation of miniature, biologically compatible interfaces will open a new vista for biology and technology, where the parts, human and electronic, combine to transcend the limitations of the cell and the electron.

John Nosta is president of NostaLab.