Will This Technology Lead Silicon Valley’s Next Revolution?


FOUR SHORT YEARS AGO, Amazon was merely a ferociously successful online retailer and the dominant provider of web hosting for companies. It also sold its own line of consumer electronics devices, including the Kindle e-reader, a bold but understandably complementary outgrowth of its pioneering role as a next-generation bookseller. Today, thanks to the ubiquitous Amazon Echo smart speaker and its Alexa voice-recognition engine, Amazon has sparked nothing less than the biggest shift in personal computing and communications since Steve Jobs unveiled the iPhone.

It all seemed like such a novelty at first. In November 2014, Amazon debuted the Echo, a high-tech genie that uses artificial intelligence to listen to human queries, scan millions of words in an Internet-connected database, and provide answers from the profound to the mundane. Now, with some 47 million Echo devices sold, Amazon responds to consumers in 80 countries, from Albania to Zambia, fielding an average of 130 million questions each day. Alexa, named for the ancient Egyptian library in Alexandria, can take musical requests, supply weather reports and sports scores, and remotely adjust a user’s thermostat. It can tell jokes, respond to trivia questions, and perform prosaic, even sophomoric, tricks. (Ask Alexa for a fart, if you must.)

Amazon didn’t invent voice-recognition technology, which has been around for decades. It wasn’t even the first tech giant to offer a mainstream voice application. Apple’s Siri and Google’s Assistant predated Alexa by a few years, and Microsoft introduced Cortana around the same time as Alexa’s launch. But with the widespread success of the Echo, Amazon has touched off a fevered race to dominate the market for “smart” home devices, which could become as important as personal computers or even smartphones.

Just as Google’s search algorithm revolutionized the consumption of information and upended the advertising industry, A.I.-driven voice computing promises a similar transformation. “We wanted to remove friction for our customers,” says Rohit Prasad, Amazon’s head scientist for Alexa, “and the most natural means was voice. It’s not merely a search engine with a bunch of results that says, ‘Choose one.’ It tells you the answer.”

The powerful combination of A.I. with a new, voice-driven user experience makes this competition bigger than simply a battle for the hottest gadget come Christmastime, though it is that too. Google, Apple, Facebook, Microsoft, and others are all pouring money into competing products. In fact, Gene Munster of the investment firm Loup Ventures estimates that the tech giants are spending a combined 10% of their annual research-and-development budgets, more than $5 billion in total, on voice recognition. He calls the advent of voice technology a “monumental change” for computing, predicting that voice commands, not keyboards or phone screens, are fast becoming “the most common way we interact with the Internet.”

With the stakes so high, it’s no surprise the competition is fierce. Amazon holds an early lead, with 42% of the global market for connected speakers, according to research firm Canalys. Google is making itself heard too: its Echo look-alike line of Google Home devices, powered by the Google Assistant, has a 34% share and has recently been outselling Amazon. The pricey, late-to-the-game Apple HomePod is a distant third. And in October, Facebook unveiled its line of Portal audio and video devices, which handle some but not all of the voice-recognition tasks of its mega-cap competitors’ products and, notably, are powered by Alexa.


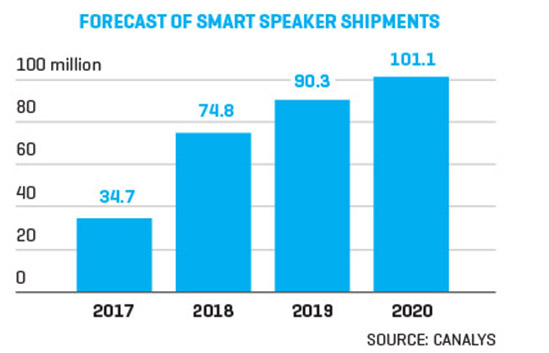

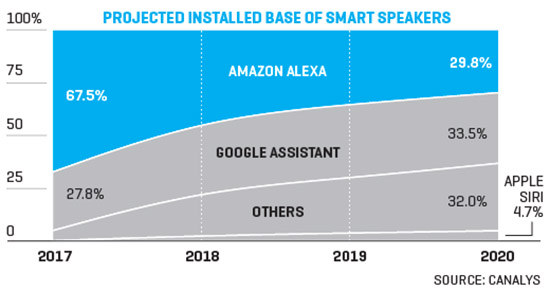

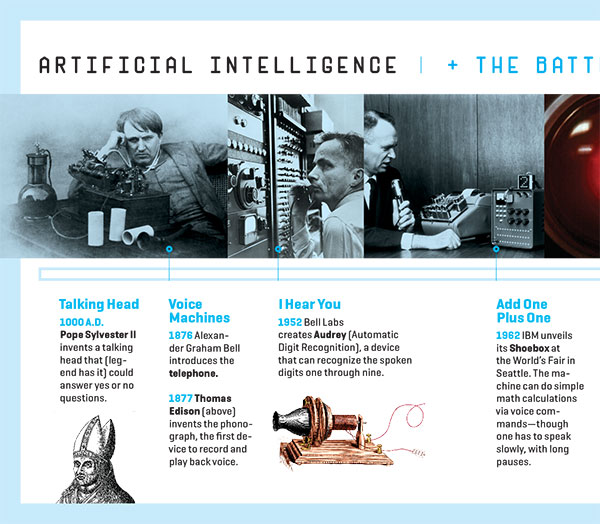

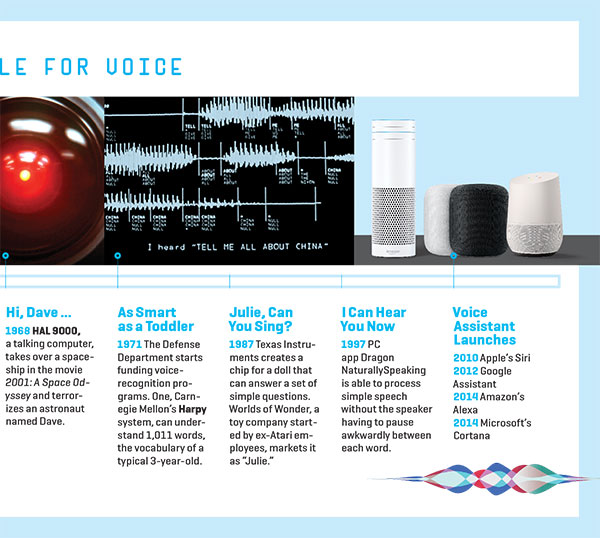


The current market for connected speakers and similar gadgets is big and growing, but it is not necessarily the most dramatic voice-related opportunity for the tech titans. Global Market Insights, a research firm, pegs global 2017 smart-speaker sales at $4.5 billion, a number it projects will grow to $30 billion by 2024. The hardware revenues, however, are largely beside the point. Amazon, for example, has sold the Echo at breakeven or less. Last holiday season it offered the bare-bones Echo Dot for $29, which ABI Research reckons is less than the cost of the device’s parts.

Instead, each major player has a strategy that in some way feeds its larger goal of locking in customers to its other goods and services. Amazon, for one, uses the Echo line to increase the value of its Amazon Prime subscription service. Google hopes voice searches will eventually boost the already massive trove of data that feeds its advertising franchise. With Siri, Apple sees a way to tie together its phones, computers, TV controllers, and even the software that automakers are tying into their onboard systems.

It’s too soon to predict a winner, what with all the investment and fast-moving innovations. But it’s safe to say the industry has coalesced around the notion that voice technology, enhanced by recent advancements in artificial intelligence, is the user interface of tomorrow. And it promises to have a democratizing impact on an industry that has separated novices from experts. “Voice enables all kinds of things,” says Nick Fox, a Google vice president who oversees product and design for the Google Assistant and Search. “It enables people who are less literate to use the system. It enables people who are driving. It enables people while cooking to hear a recipe. Every once in a while there is a tectonic shift in technology, and we think voice is one of those.”

For all that, voice recognition remains in its infancy. Its applications are rudimentary compared with where researchers expect them to go, and there’s a significant ick factor associated with voice. Legitimate concerns linger as to how much the tech companies are eavesdropping on their customers, and how much power they are accumulating in the form of data derived from the spoken information they are collecting. “With A.I. voice recognition, we’ve gone from the age of the biplane to the age of the jet plane,” says Mari Ostendorf, a professor of electrical engineering at the University of Washington and one of the world’s top scientists on speech and language technology. She notes that computers have gotten good at answering straightforward questions but are still relatively hopeless when it comes to actual dialogue. “It’s truly impressive what Big Tech has done in terms of how many words voice A.I. can now recognize and the number of commands it can understand. But we’re not in the rocket era yet.”

VOICE RECOGNITION HAS BEEN the next killer app for decades. In the 1950s, Bell Labs created a system called Audrey that could recognize the spoken digits one through nine. In the 1990s, PC users installed Dragon NaturallySpeaking, a program that could process simple speech without the speaker having to pause awkwardly after each word. But it wasn’t until Apple unleashed Siri on the iPhone in 2010 that consumers got a sense of what a voice-recognition engine tied to massive computing power could accomplish. Around the same time, Amazon, a company full of Star Trek aficionados and led by a true Trekkie in CEO Jeff Bezos, began dreaming about replicating the talking computer aboard the Starship Enterprise. “We imagined a future where you could interact with any service through voice,” says Amazon’s Prasad, who has published more than 100 scientific articles on conversational A.I. and other topics. The result was Alexa, a multifaceted device designed to let consumers communicate more easily with Amazon.
As voice recognition improves (which it does as computing power gets faster, cheaper, more ubiquitous, and thus more mainstream), Amazon, Google, Apple, and others can more easily build a seamless network where voice links their smart home devices with other systems. It’s possible for Apple CarPlay users, for example, to tell Siri on the drive home to slot the latest episode of Game of Thrones as “up next” on their Apple TV and to command their HomePod to play it once they’ve arrived. Two years ago, Google released its voice-enabled Home, which ties together its music offerings, YouTube, and its latest Pixel phones and tablets. Each tech giant, in other words, sees voice as a tether to the myriad digital products it is creating.

The combatants, each wildly profitable and therefore able to fund ample research and marketing efforts, bring different assets to the table. Apple and Google, for example, own the two dominant mobile operating systems, iOS and Android, respectively. That means Siri and Google Assistant come preinstalled on nearly all new phones. Amazon, in contrast, needs to get consumers to install and then open the Alexa app on their iPhones or Android devices. “The extra step to open the Alexa voice app puts Amazon at a distinct disadvantage,” says Loup’s Munster, formerly a Wall Street analyst of computer companies. By contrast, all that’s required to activate Siri and the Google Assistant is to say their names.

That said, iOS and Android are open to third-party developers of all stripes, and Amazon is one of them, meaning that nothing is stopping developers on both platforms from writing Alexa programs. Bezos bragged in an earnings release earlier this year that “tens of thousands of developers across more than 150 countries” are building Alexa apps and incorporating them into non-Amazon devices. Indeed, partnerships are a key battleground for voice applications. Alexa is built into “soundbars” from Sonos, headphones from Jabra, and cars from BMW, Ford, and Toyota. Google boasts integrations with audio equipment makers Sony and Bang & Olufsen, August smart locks, and Philips LED lighting systems, and Apple has partnerships that allow its HomePod to work with First Alert Security systems and Honeywell smart thermostats. “The beauty of these partnerships,” says Google’s Fox, “is that they allow us to link voice into the whole smart-appliance ecosystem. I don’t have to open my phone and go to an app. I can just say to the device, ‘Show me who’s at my front door,’ and it will pop right up. It’s simplifying by unifying.”

Artificial intelligence has long been a staple of dystopian popular culture, notably in films such as The Terminator and The Matrix, where wickedly clever machines rise up and threaten humankind. Thankfully, we’re not there yet, but advances in A.I. and the availability of cheap computing have made impressively futuristic applications a reality. Early voice-recognition programs were only as good as the programmers who wrote them. Now these apps keep getting better because they are connected through the Internet to data centers, where complex mathematical models sift through huge amounts of data that companies have spent years compiling and learn to recognize different speech patterns. They can recognize vocabulary, regional accents, colloquialisms, and the context of conversations by analyzing, for example, recordings of call-center agents talking with customers or interactions with a digital assistant.
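A classic statistical technique long used in speech recognition, the n-gram language model, illustrates the shift from rules written by programmers to patterns learned from data. The sketch below is a hypothetical toy. It uses a made-up five-sentence corpus, applies no smoothing, and is far simpler than the neural models the companies actually use. It counts which words follow which, and it shows that a longer context narrows the guesses. The same intuition drives the weather example Google’s Johan Schalkwyk gives later in this article.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Hypothetical training transcripts; production systems learn from vastly more data.
CORPUS = [
    "what's the weather in seattle",
    "what's the weather in boston",
    "what's the weather in seattle tomorrow",
    "turn on the microwave",
    "play music in the kitchen",
]


def train(sentences: list[str], context_len: int) -> dict[tuple[str, ...], Counter[str]]:
    """Count which word follows each run of `context_len` preceding words."""
    model: dict[tuple[str, ...], Counter[str]] = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i in range(context_len, len(words)):
            context = tuple(words[i - context_len:i])
            model[context][words[i]] += 1
    return model


def next_word_probs(
    model: dict[tuple[str, ...], Counter[str]], context: list[str]
) -> dict[str, float]:
    """Relative frequency of each word seen after `context`; empty if never seen."""
    counts = model.get(tuple(w.lower() for w in context), Counter())
    total = sum(counts.values())
    return {word: n / total for word, n in counts.items()} if total else {}


if __name__ == "__main__":
    one_word = train(CORPUS, context_len=1)
    two_words = train(CORPUS, context_len=2)
    # After "in": seattle 0.5, boston 0.25, and also "the" 0.25.
    print(next_word_probs(one_word, ["in"]))
    # After "weather in": only city names remain (seattle about 0.67, boston about 0.33).
    print(next_word_probs(two_words, ["weather", "in"]))
```

With one word of context, “in” could be followed by a city or by “the”. With two words (“weather in”), only city names remain. Real systems replace raw counts with smoothed or neural models so that word sequences never seen in training still get a sensible probability.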


Chinese translation by 朴成奎 for Fortune China (财富中文网).

Voice-recognition systems rely as much on physics as on computer science. Speech creates vibrations in the air, which voice engines pick up as analog sound waves and then translate into a digital format. Computers can then analyze that digital data for meaning. Artificial intelligence turbocharges the process. First, it figures out whether the sound is directed at its system by detecting a customer-chosen “wake word” such as “Alexa.” Then it uses machine-learning models, trained on what millions of other customers have said before, to make highly accurate guesses as to what was said. “A voice-recognition system first recognizes the sound, and then it puts the words in context,” explains Johan Schalkwyk, an engineering vice president for the Google Assistant. “If I say, ‘What’s the weather in …,’ the A.I. knows that the next word is a country or a city. We have a 5-million-word English vocabulary in our database, and to recognize one word out of 5 million without context is a super hard problem. If the A.I. knows you’re asking about a city, then it’s only a one-in-30,000 task, which is much easier to get right.”

Computing power allows the systems multiple opportunities to learn. When a user asks Alexa to turn on the microwave (a real example), the voice engine first needs to understand the command. That means learning to decipher thick Southern accents (“MAH-cruhwave”), high-pitched kids’ voices, non-native speakers, and so on, while at the same time filtering out background noise like song lyrics playing on the radio. It then has to understand the many ways people might ask to use the microwave: “Reheat my food,” “Turn on my microwave,” “Nuke the food for two minutes.” Alexa and other voice assistants match questions with similar commands in the database, thereby “learning” that “reheat my food” is how a particular user is likely to ask in the future.
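The wake-word gate and the phrase matching described above can be sketched in a few lines of Python. This is a toy, not how Alexa or the Google Assistant actually work. It assumes the speech has already been transcribed to text, and it substitutes simple word overlap (Jaccard similarity) for the trained machine-learning models the article describes. The intent names, example phrasings, and the 0.3 threshold are all hypothetical.

```python
from __future__ import annotations

# Toy sketch only: real assistants detect wake words in audio and use trained
# statistical models, not the word-overlap rule used here.

WAKE_WORD = "alexa"  # hypothetical default; customers can choose other wake words

# Hypothetical intent table: intent name -> example phrasings seen before.
INTENT_EXAMPLES: dict[str, list[str]] = {
    "microwave.start": [
        "reheat my food",
        "turn on my microwave",
        "nuke the food for two minutes",
    ],
    "weather.get": [
        "what is the weather",
        "what's the weather in seattle",
    ],
}


def tokens(text: str) -> set[str]:
    """Lowercase, turn punctuation (except apostrophes) into spaces, split into words."""
    cleaned = "".join(ch if ch.isalnum() or ch in " '" else " " for ch in text.lower())
    return set(cleaned.split())


def jaccard(a: set[str], b: set[str]) -> float:
    """Word overlap between two sets: 0.0 means disjoint, 1.0 means identical."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def strip_wake_word(transcript: str, wake_word: str = WAKE_WORD) -> str | None:
    """Return the command after the wake word, or None if the speech was not
    addressed to the assistant."""
    parts = transcript.strip().split(maxsplit=1)
    if not parts or parts[0].lower().strip(",.!?") != wake_word:
        return None
    return parts[1] if len(parts) > 1 else ""


def match_intent(command: str, threshold: float = 0.3) -> str | None:
    """Return the intent whose closest example overlaps most with the command,
    or None if no example clears the threshold."""
    words = tokens(command)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENT_EXAMPLES.items():
        for example in examples:
            score = jaccard(words, tokens(example))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent if best_score >= threshold else None


def learn(command: str, intent: str) -> None:
    """Store a confirmed phrasing so the same wording matches exactly next time."""
    INTENT_EXAMPLES.setdefault(intent, []).append(command.lower())


def handle(transcript: str) -> str | None:
    """Wake-word gate first, then intent matching."""
    command = strip_wake_word(transcript)
    return None if command is None else match_intent(command)


if __name__ == "__main__":
    print(handle("Alexa, heat up my food"))  # microwave.start
    print(handle("heat up my food"))         # None: no wake word
```

Appending confirmed phrasings through `learn` is a crude stand-in for the way, per the article, assistants come to expect a particular user’s wording. A production system would instead retrain or adapt a statistical model rather than grow a lookup list.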
The technology has taken off in part because it has gotten so proficient at translating human commands into action. Google’s Schalkwyk says his company’s voice engine now responds with 95% accuracy, roughly the same so-so level that human listeners achieve, up from only 80% in 2013. One of the great recent triumphs in the field has been teaching the engines to filter out nonspoken background noise, a distraction that can frustrate the keenest human ear. These systems reach this level, however, only when the question is simple, like, “What time is Mission: Impossible playing?” Ask the Google Assistant or Alexa for an opinion or try to have an extended back-and-forth conversation, and the machine is likely either to give a jokey preprogrammed answer or simply to demur: “Hmm, I don’t know that one.”

TO CONSUMERS, voice-driven gadgets are helpful and sometimes entertaining “assistants.” For the tech giants that make them (and keep them connected to the computers in their data centers), they’re tiny but extremely efficient data collectors. About 60% of Amazon Echo and Google Home users have at least one household accessory, such as a thermostat, security system, or appliance, connected to them, according to Consumer Intelligence Research Partners. A voice-powered home accessory can record endless facts about a user’s daily life. And the more data Amazon, Google, and Apple can accumulate, the better they can serve those consumers, whether through additional devices, subscription services, or advertising on behalf of other merchants.

The commercial opportunities are straightforward. A consumer who connects an Echo to his thermostat might be receptive to an offer to buy a smart lighting system. Creepy though it may sound to privacy advocates, the tech giants are sitting on top of a treasure trove of personal data with which to market to consumers more efficiently.
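Schalkwyk’s accuracy figures above are easier to compare as error rates. This assumes that “accuracy” means the share of requests recognized correctly, since the article does not define it more precisely. On that reading, the error rate $e$ is one minus accuracy:

```latex
\begin{aligned}
e_{2013} &= 1 - 0.80 = 0.20 \\
e_{\text{now}} &= 1 - 0.95 = 0.05 \\
\frac{e_{2013}}{e_{\text{now}}} &= \frac{0.20}{0.05} = 4
\end{aligned}
```

In other words, a 15-point gain in accuracy corresponds to roughly a fourfold cut in errors. The engine goes from misunderstanding about one request in five to about one in twenty.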
As with their overall strategies, the tech giants have different approaches to the data they collect. Amazon says it uses data from Alexa to make the software smarter and more useful to its customers. The better Alexa becomes, the company claims, the more customers will see the value of its products and services, including its Prime membership program. Although Amazon is making a big push into advertising (the research firm eMarketer projects the company will pull in $4.61 billion from digital advertising in 2018), a spokesperson says it does not currently use Alexa data to sell ads. Google, counterintuitively, considering its giant ad business, also isn’t positioning voice as an ad opportunity, at least not yet. Apple, which loudly plays up the virtue of its unwillingness to exploit customer data for commercial gain, claims to be approaching voice merely as a way to improve the experience of its users and to sell more of its expensive HomePods.

DESPITE ONE OF AMAZON’S early selling points, what people aren’t asking their devices to do is help them shop. Amazon won’t comment on how many Echo users shop with the device, but a recent survey of book buyers by consulting firm the Codex Group suggests that it’s still early days. It found that only 8% used the Echo to buy a book, while 13% used it to listen to audiobooks. “People are creatures of habit,” says Vincent Thielke, an analyst with research firm Canalys, which focuses on tech. “When you’re looking to buy a coffee cup, it’s hard to describe what you want to a smart speaker.”

Amazon does say it’s not overly fixated on the Echo as a shopping aid, especially given how the device ties in with the other services it offers through its Prime subscription. Still, it holds out hope that the Amazon-optimized computers it has placed in customers’ homes will boost its retail business. “What is available for shopping is your buying history,” says Amazon’s Prasad, the natural-language-processing scientist.
“If you want to buy double-A batteries, you don’t need to see them, and you don’t need to remember which ones. If you’ve never bought batteries before, we will suggest Amazon’s brand, of course.”

The potential to boost shopping remains far bigger than selling replacement batteries, especially because so many merchants will want to partner with, and take advantage of, the platforms associated with the tech giants. The research firm OC&C Strategy Consultants predicts that voice shopping sales from Echo, Google Home, and their ilk will reach $40 billion by 2022, up from $2 billion today. A critical evolution of the speakers helps explain the promise. Both Amazon and Google now offer smart home devices with screens, which make the gadgets feel more like a cross between small computers and television sets and thus better suited to online shopping. Amazon launched the $230 Echo Show in the spring of 2017. Like other Echo devices, the Show has Alexa embedded, but it also enables users to see images. That means shoppers can see the products they are ordering as well as their shopping lists, TV shows, music lyrics, feeds from security cameras, and photos from that vacation in Montana, all without pushing any buttons or manipulating a computer mouse.

For its part, Google has partnered with four consumer electronics manufacturers, some of which have recently started selling smart screens integrated with the Google Assistant. The Lenovo Smart Display, for example, looks a lot like Facebook’s new Portal and retails for $250, the same price as the JBL Link View. LG plans to launch the ThinQ View. In October, Google started selling its own version, the Home Hub, for $149, with a seven-inch screen.

In the long run, Google is betting that having a screen will make voice shopping easier. The search company doesn’t sell products directly like Amazon, but its Google Shopping site connects retailers to the Google search engine.
Already it is positioning the Google Home device as a shopping tool. It has a partnership with Starbucks, for example, that enables a user to tell the Google Assistant to order “my usual,” and the order will be ready upon arrival. Last year, Google cemented a partnership with Walmart, the world’s largest retailer. Shoppers can link their existing Walmart online account to Google’s shopping site and simply ask Google Home to check whether a favorite pair of running shoes is in stock, reserve a flat-screen TV for same-day pickup, or find the nearest Walmart store.

The rise of vision-recognition tech (voice recognition’s A.I. sibling, long used for matching faces of criminals in a crowd) will make shopping on these devices even more convenient. In September, Amazon announced it was testing with Snapchat an app that enables shoppers to take a picture of a product or a bar code with Snapchat’s camera and then see an Amazon product page on the screen. It’s not hard to imagine that the next step for shoppers will be to use the camera embedded in the Echo Show to snap a picture of something they’d like to buy and then see onscreen the same or similar items along with prices, ratings, and whether they’re available for Prime two-day free shipping.

EXCITING AS THIS technology is, it may take non-technophiles a bit of time to get used to speaking to machines. The tech giants aren’t the most trusted of companies right now, and they’ll need to convince consumers their devices aren’t eavesdropping for nefarious reasons. Smart speakers are supposed to click into listen mode only when they detect “wake words,” such as “Alexa” or “Hey, Google.” In May, Amazon mistakenly sent a conversation about hardwood floors that a Portland executive was having with his wife to one of his employees. Amazon publicly apologized for the snafu, saying it had “misinterpreted” the conversation. The spoken word has the potential for errors far beyond that of typed commands.
This can have commercial repercussions. Last year a 6-year-old Dallas girl was talking to Alexa about cookies and dollhouses, and days later, four pounds of cookies and a $170 dollhouse were delivered to her family’s door. Amazon says Alexa has parental controls that, if used, would have prevented the incident.

Still, widespread adoption is likely because of the growing convenience of a voice-connected world. With more than 100 million of these devices already installed and in listening mode, it’s only a matter of time before voice becomes the dominant way humans and machines communicate with each other, even if the conversation involves little more than scatological sounds and squeals of laughter.

Brian Dumaine is the author of a forthcoming book on Amazon to be published by Scribner. This article originally appeared in the November 1, 2018 issue of Fortune.